Developers and Deployers New to Public Engagement

The race to develop artificial intelligence is accelerating, and AI products are being deployed at unprecedented speed, often without the necessary safeguards in place. Systems are being released into the world before they have been thoroughly tested for risks, unintended consequences, or potential harm. This pace of innovation presents a critical challenge: how do we ensure that AI remains safe, ethical, and relevant to those it affects most? Part of the answer lies in meaningful public engagement.

In the mad dash toward progress, public engagement is frequently sidelined, viewed as a bottleneck rather than a necessity. This oversight is especially concerning as AI development shifts from narrowly focused, task-specific models to powerful, general-purpose foundation models.

Different AI systems require different levels of engagement, depending on factors like the type of data they rely on, how their parameters are set, and how easily they can be explained to the public. And as we move into an era where AI-powered assistants and autonomous systems become more embedded in our daily lives, engaging with a diverse array of users and other impacted people will become even more critical.

Among those whose voices matter most are socially marginalized communities: groups that have long been overlooked in political, social, and institutional decision-making. Their perspectives are not only valuable but essential. Consider, for instance, individuals with disabilities who rely on assistive technologies daily. Their lived experiences provide critical insights into both the challenges and possibilities of AI-driven accessibility tools, insights that might be entirely invisible to those who have never needed such technologies. Improvements made in response to their insights produce better products for everyone. Closed captioning, for example, not only gives people with hearing difficulties access to spoken content but also helps anyone who cannot listen to audio at a given moment.

Without intentional and inclusive engagement, AI risks reinforcing existing inequalities and failing the very people it was meant to serve. The question is no longer whether public engagement should be part of AI development—but rather, how we can make it a fundamental and non-negotiable step in shaping the future of AI.

What is public engagement?

At its core, public engagement refers to the process of involving the individuals, communities, and organizations that are directly or indirectly affected by AI technologies in the development of those technologies. This helps ensure that the technology is not only functional and marketable but also ethically sound and socially responsible.

Public engagement moves beyond traditional user research by actively involving diverse perspectives in decision-making throughout the AI lifecycle. This includes consultation, collaboration, and co-creation with a range of voices, particularly those from marginalized and vulnerable communities who might face the greatest impact—both positive and negative—of AI deployment.

Insights Gained through Public Engagement

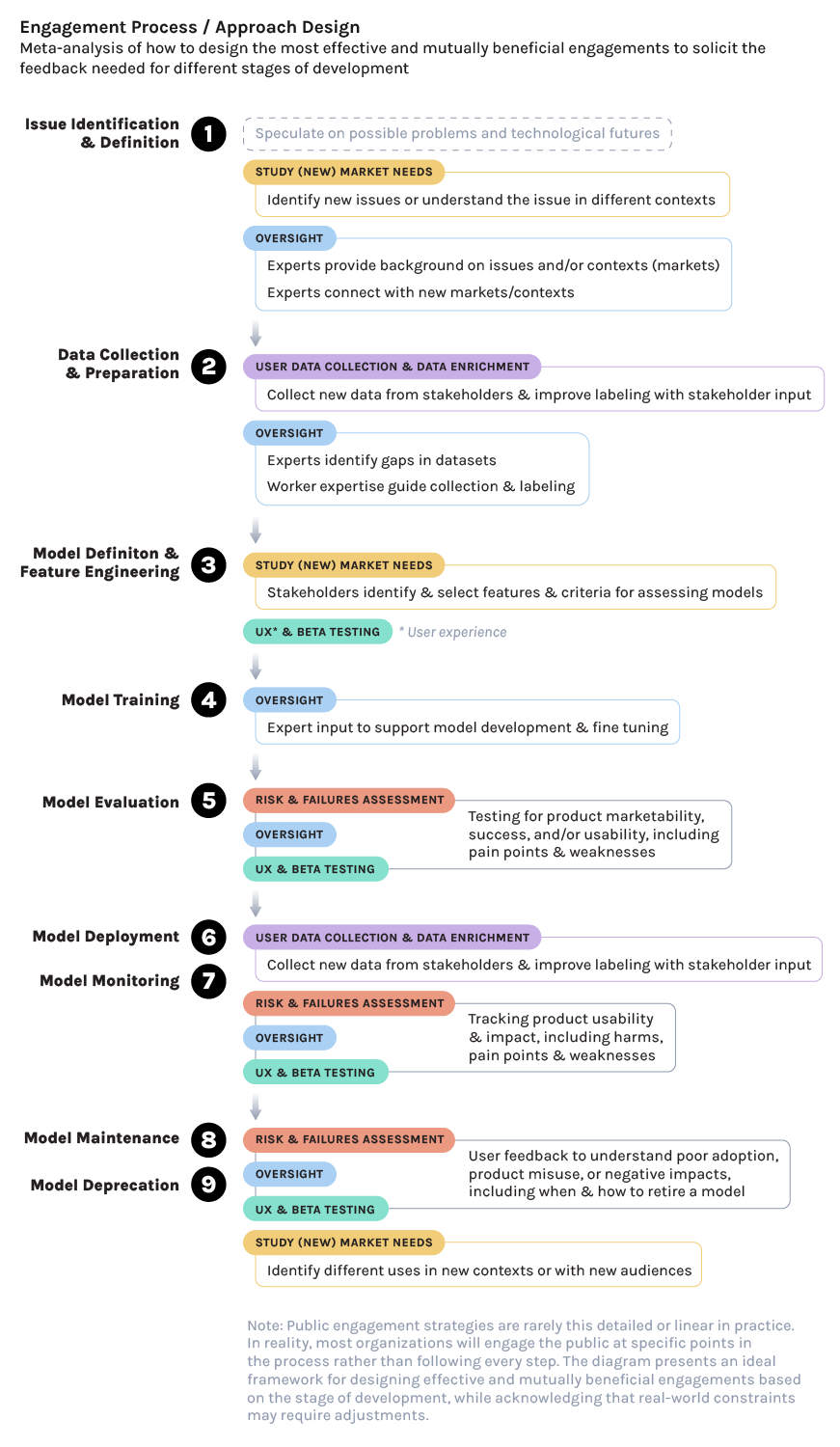

Public engagement can be conducted throughout the AI development lifecycle in order to elicit different kinds of insights to improve products and services or to mitigate potential harms. Broadly speaking, the different “use cases” or purposes for public engagement often include:

The purpose is to better understand an existing or potential market of users, customers, or people otherwise affected by a product or service. A concept may not yet be in development, and the aim may be to build a foundational understanding of what potential users do and do not want in an AI-driven product or service. Public engagement can help to better understand:

- nuances between existing consumer markets and new potential markets

- issues a consumer market is facing that may support an AI-driven solution (including whether or not an AI-driven solution is even needed for that market or audience)

Product teams may conduct early-stage market studies before a new product/feature is fully designed or built. These market studies may be conducted in the form of desk research of existing knowledge and studies, focus groups (group interviews), individual interviews, mass market surveys, and consultations with experts.

[LIFECYCLE DIAGRAM]

Public Engagement Approaches and Activities

Public engagement activities include things like:

- Focus groups or group meetings: facilitated discussions where participants are asked to talk about a topic as a group

- Participatory workshops: a series of facilitated discussion and activity sessions for a designated group

- Qualitative interviews: individual interviews where participants are asked a similar battery of questions

- User testing: participants are invited to use the product/service and give direct feedback about their experience

- Diary studies: participants are invited to use the product/service over an extended period of time and maintain a regular diary where they note their observations

- Surveys and questionnaires: distributing structured or semi-structured surveys to gather quantitative and qualitative data on public opinions, preferences, and experiences

- Community advisory boards: establishing one-time or ongoing advisory groups composed of community representatives who provide input on specific products

- Public consultations: structured processes where participants are invited to review and comment on proposed policies, plans, or products before final decisions are made

- “Hack-a-thons,” innovation sprints, or red-teaming exercises: intensive, time-bound events where diverse teams of participants collaborate to develop solutions to, or probe for weaknesses in, a specific product or service

- Listening tours: conducting visits to various communities to listen to their concerns, needs, and perspectives in their own environments

Why does public engagement matter?

Imagine launching an AI product that seems flawless during development, only to find once it reaches the market that it overlooks critical risks, alienates key user groups, or even causes harm. This scenario is more likely than you might think, and it often stems from one major oversight: failing to engage the various groups of affected people early in the development process.

Working with users and other impacted members of the public isn’t just a box to check—it’s a strategic advantage. It fuels innovation, strengthens products, and ensures AI serves people in ways that are ethical, sustainable, and effective. By involving a diverse range of voices, companies can identify risks before they escalate into real-world consequences. Take, for instance, workers and labor organizations. Consulting them before deploying AI-driven automation can reveal hidden threats to job security, worker rights, and overall well-being—issues that might otherwise go unnoticed until it’s too late.

But the benefits go far beyond risk mitigation. Meaningful public engagement broadens developers’ understanding of the historical and social contexts in which their technology will operate. It ensures that AI products are designed not just for theoretical users, but for the full spectrum of people who will interact with them in their daily lives. Without this insight, developers risk missing crucial aspects of the problem space, leading to solutions that don’t actually solve the right problems.

And here’s the final, often underestimated, advantage: trust. When companies actively involve the public—whether they’re users, advocacy groups, or impacted communities—they foster a sense of shared ownership over the technology being built. This trust can translate into stronger consumer relationships, greater public confidence, and ultimately, broader adoption of AI products.

Common purposes for public engagement include:

- To identify issues that AI technologies could help resolve or markets they could serve, as well as cases where AI-driven innovation is not the best approach

- To identify potential risks and harms, as they may arise for different (groups of) people

- To identify circumstances where the technology does not work as intended

- To identify friction points that make the technology difficult to use or inaccessible for certain people

- To align with general democratic principles and promote greater access to and adoption of new technologies

Additionally, individuals, teams, and organizations may elect to engage with the public for a number of reasons:

Understanding these different incentives can help you advocate more effectively for public engagement and set realistic expectations about its outcomes with decision makers and organizational leadership. It is also crucial to identify common ground between an organization’s incentives for public engagement and the benefits of integrating public input at different stages of AI development.
