Decoding AI Governance: A Toolkit for Navigating Evolving Norms, Standards, and Rules

![Governance labyrinth](https://partnershiponai.org/wp-content/uploads/2025/07/governance-labyrinth-1800x832-1.webp)

Published jointly with Microsoft

Coherence among governance efforts is key to unlocking AI opportunity, particularly in a shifting geopolitical environment. It reinforces governance priorities and simplifies implementation; hastens and deepens shared expectations for best practices and assurance; and strengthens partnership among diverse stakeholders, including AI developers and deployers, policymakers, and civil society. When governance is strong, trust follows—and with it, broader and more responsible use of AI for society’s benefit.

In AI governance, clarity and alignment are needed to avoid the negative outcomes of fragmentation and geopolitical volatility. Throughout history, shared frameworks have fueled progress toward coherence by providing a common language, enhancing coordination, and defining collective building blocks toward desired outcomes.

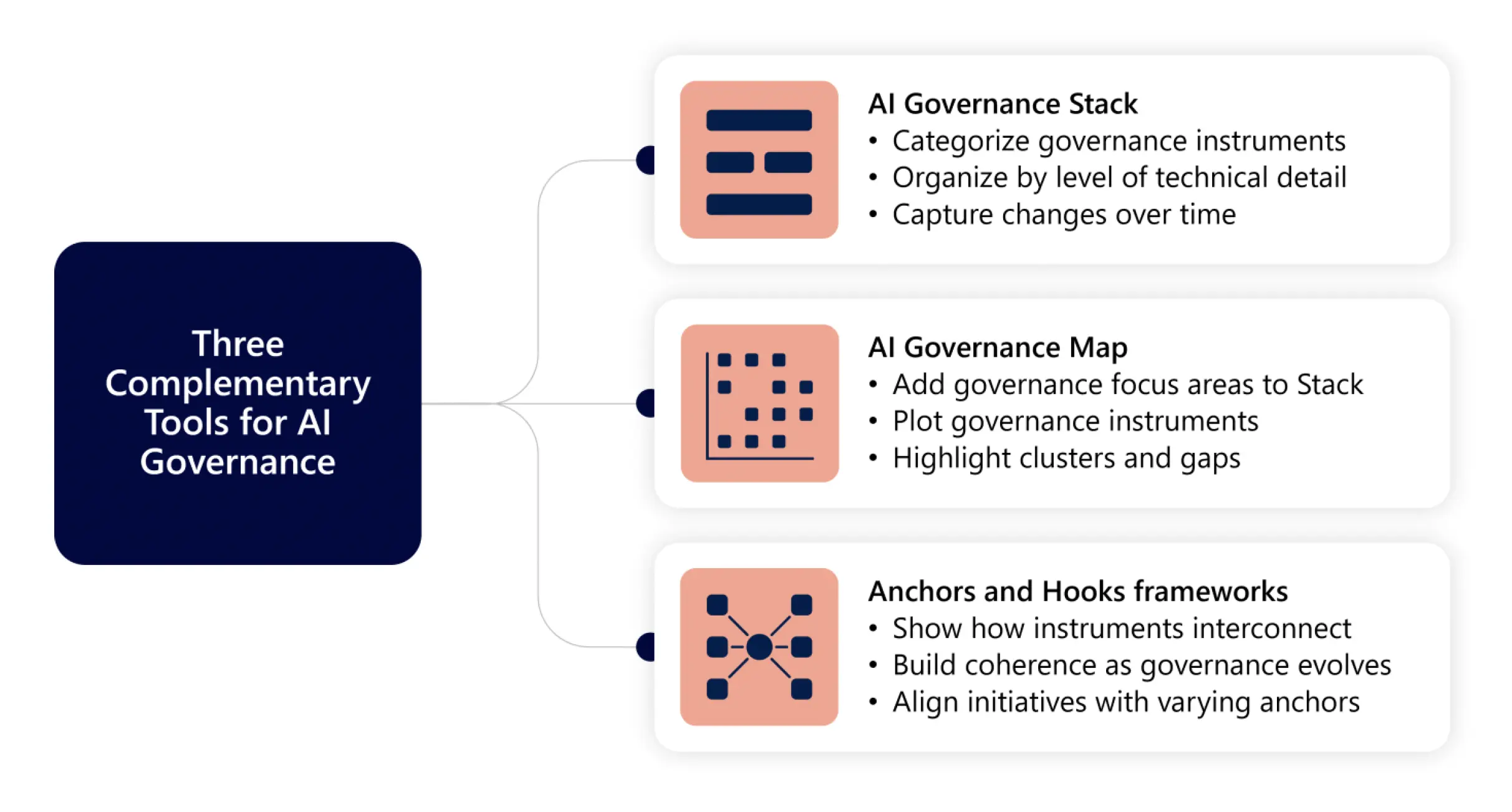

Building on recent momentum, such as the French international AI governance statement and cartography, and recognizing the need for shared frameworks that help stakeholders understand and coherently shape a dynamic AI policy landscape, we are introducing, and seeking feedback on, three conceptual tools:

- the AI Governance Stack

- the AI Governance Map

- Anchors and Hooks frameworks

Together, they address the why, what, how, and who of governance.

As elaborated in the accompanying Zero Draft Paper, these tools support shared understanding, identify gaps and opportunities, and promote greater interoperability across AI governance efforts. They are designed to evolve with the landscape and to help those shaping it do so with greater clarity, coordination, and impact.

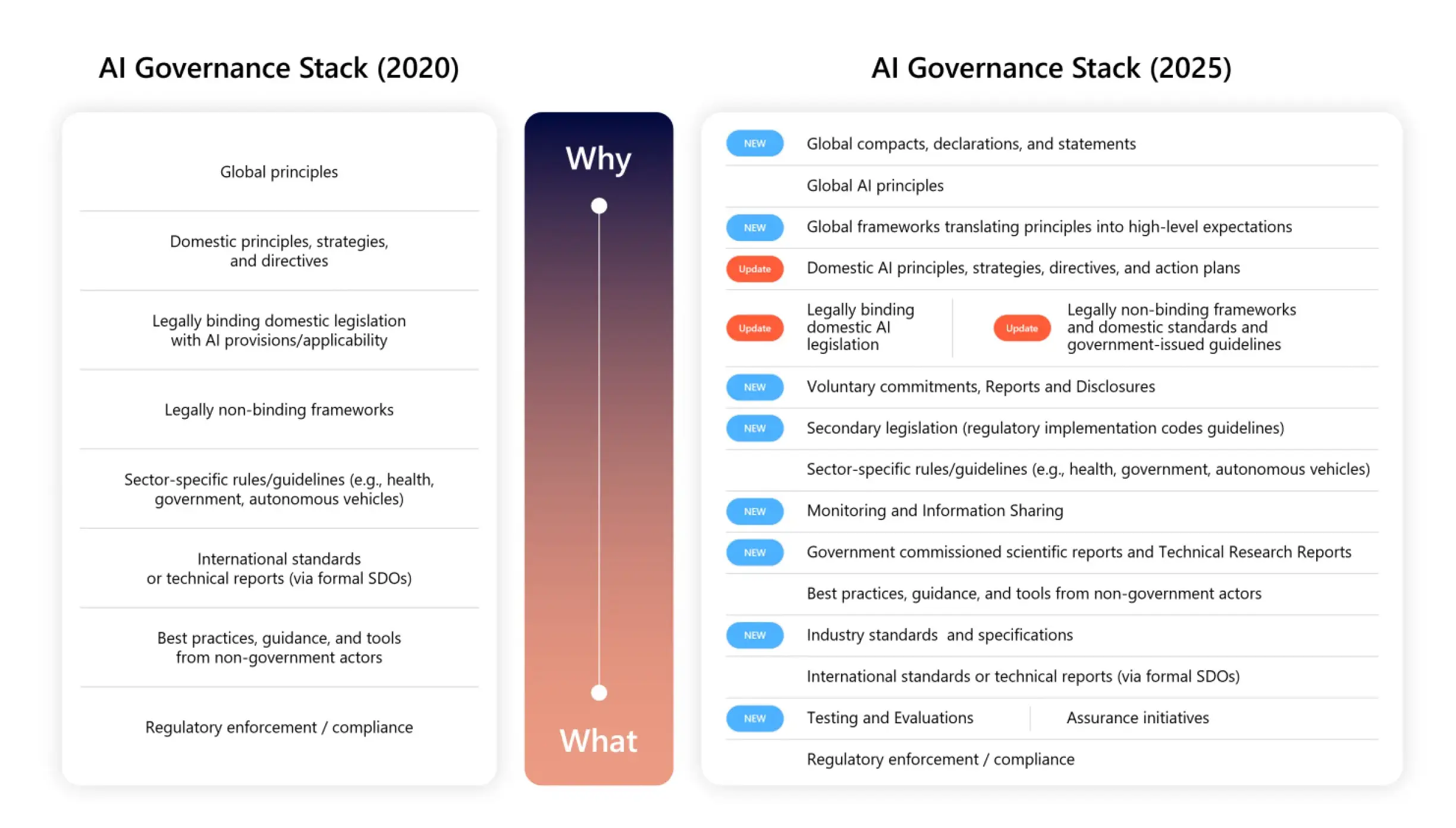

The Stack: Understanding How We’re Governing

The AI Governance Stack is a conceptual framework that organizes governance instruments—i.e., the “how,” such as multilateral declarations, domestic legislation, and industry standards—by their level of abstraction and technical detail.

- Top of the stack: Most abstract and aspirational instruments (e.g., multilateral declarations) that reflect shared values—the “why” of AI governance.

- Middle layers: Mid-level instruments (e.g., legislation) that define and explore the “what”: broad focus areas (e.g., transparency) treated as governance priorities.

- Bottom of the stack: Most technically and operationally detailed instruments (e.g., industry standards), supporting practical implementation of the “what.”

As the versions of the Stack in the Zero Draft Paper illustrate, instruments can be linked to the governance actors that develop them and the “functions” that different layers of the stack play (e.g., issue identification versus issue definition). The Stack thus helps capture and make sense of the proliferation of governance instruments and actors, showing how new layers, types of instruments, and actors emerge and how functions overlap or interrelate with those at other layers. By visualizing these changes, the Stack makes it easier to spot trends, redundancies, or missing links.

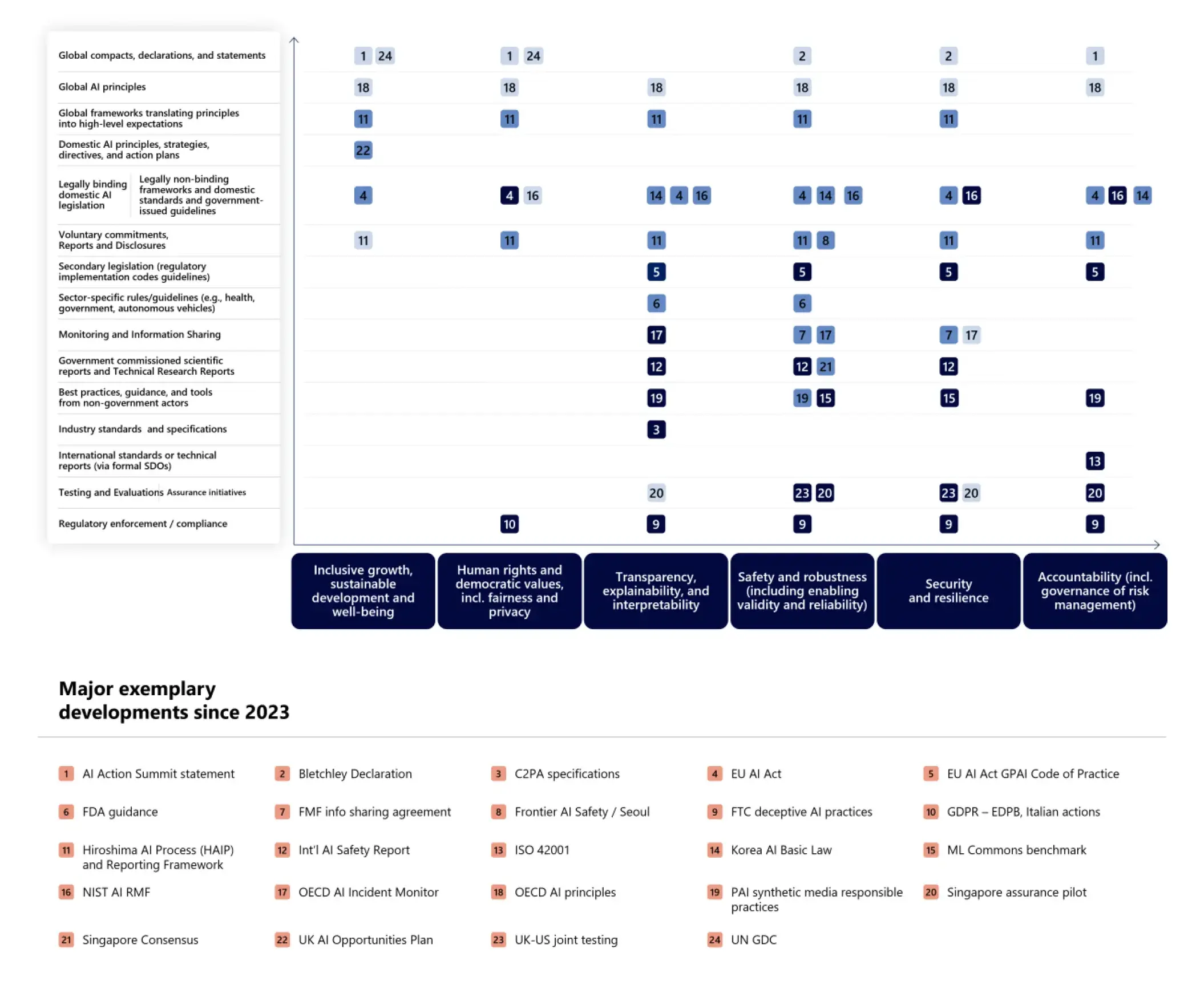

The AI Governance Map builds on the Stack by plotting governance instruments along two axes:

- Y-axis: Layers of governance instruments, drawn from the Stack.

- X-axis: Governance focus areas (e.g., transparency). These can be drawn from the high-level framing of widely endorsed or applicable frameworks in the Stack’s upper and/or middle layers. For the Map below, we have drawn on the OECD AI Principles and the characteristics of trustworthy AI from the NIST AI Risk Management Framework.

By plotting instruments on this two-dimensional map, stakeholders can identify where activity is concentrated and where there may be gaps. For instance, some focus areas may have abundant legislative activity but few technical standards, or vice versa. This structured view helps stakeholders target underdeveloped areas, avoid duplication, and identify and address clusters (a concept unpacked in the Zero Draft Paper). The Map provided here is far from exhaustive: it highlights selected key developments since 2023 that have broad relevance, at the expense of, e.g., important sectoral developments.
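To make the gap-and-cluster reading concrete, the Map can be treated as a grid keyed by (layer, focus area). The Python below is a minimal sketch under stated assumptions: the three layers, three focus areas, instrument names, and cluster threshold are all hypothetical placeholders, not the contents of the actual Map, and “cluster” is reduced here to a simple count threshold, which is far cruder than the concept developed in the Zero Draft Paper.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical labels, for illustration only (not the Map's actual axes).
LAYERS = ["top", "middle", "bottom"]  # Stack layers: most abstract -> most technical
FOCUS_AREAS = ["transparency", "accountability", "robustness"]


@dataclass(frozen=True)
class Instrument:
    name: str
    layer: str       # y-axis position: one of LAYERS
    focus_area: str  # x-axis position: one of FOCUS_AREAS

    def __post_init__(self):
        # Reject positions that do not exist on this toy Map.
        if self.layer not in LAYERS or self.focus_area not in FOCUS_AREAS:
            raise ValueError(f"unknown Map position for {self.name!r}")


def build_map(instruments):
    """Count instruments in every (layer, focus area) cell, including empty cells."""
    counts = Counter((i.layer, i.focus_area) for i in instruments)
    return {(layer, area): counts[(layer, area)] for layer in LAYERS for area in FOCUS_AREAS}


def find_gaps(grid):
    """Cells with no instruments: candidate gaps, in layer-then-focus-area order."""
    return [cell for cell, n in grid.items() if n == 0]


def find_clusters(grid, threshold=2):
    """Cells with at least `threshold` instruments: a crude proxy for clusters."""
    return [cell for cell, n in grid.items() if n >= threshold]


# Hypothetical instruments with placeholder names.
instruments = [
    Instrument("Declaration A", "top", "transparency"),
    Instrument("Law B", "middle", "transparency"),
    Instrument("Law C", "middle", "transparency"),
    Instrument("Standard D", "bottom", "robustness"),
]
grid = build_map(instruments)
print(find_gaps(grid))
print(find_clusters(grid))  # [('middle', 'transparency')]
```

In this toy data, transparency has legislative activity but nothing in the bottom layer, so ("bottom", "transparency") surfaces as a gap: the “abundant legislation, few technical standards” pattern described above.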

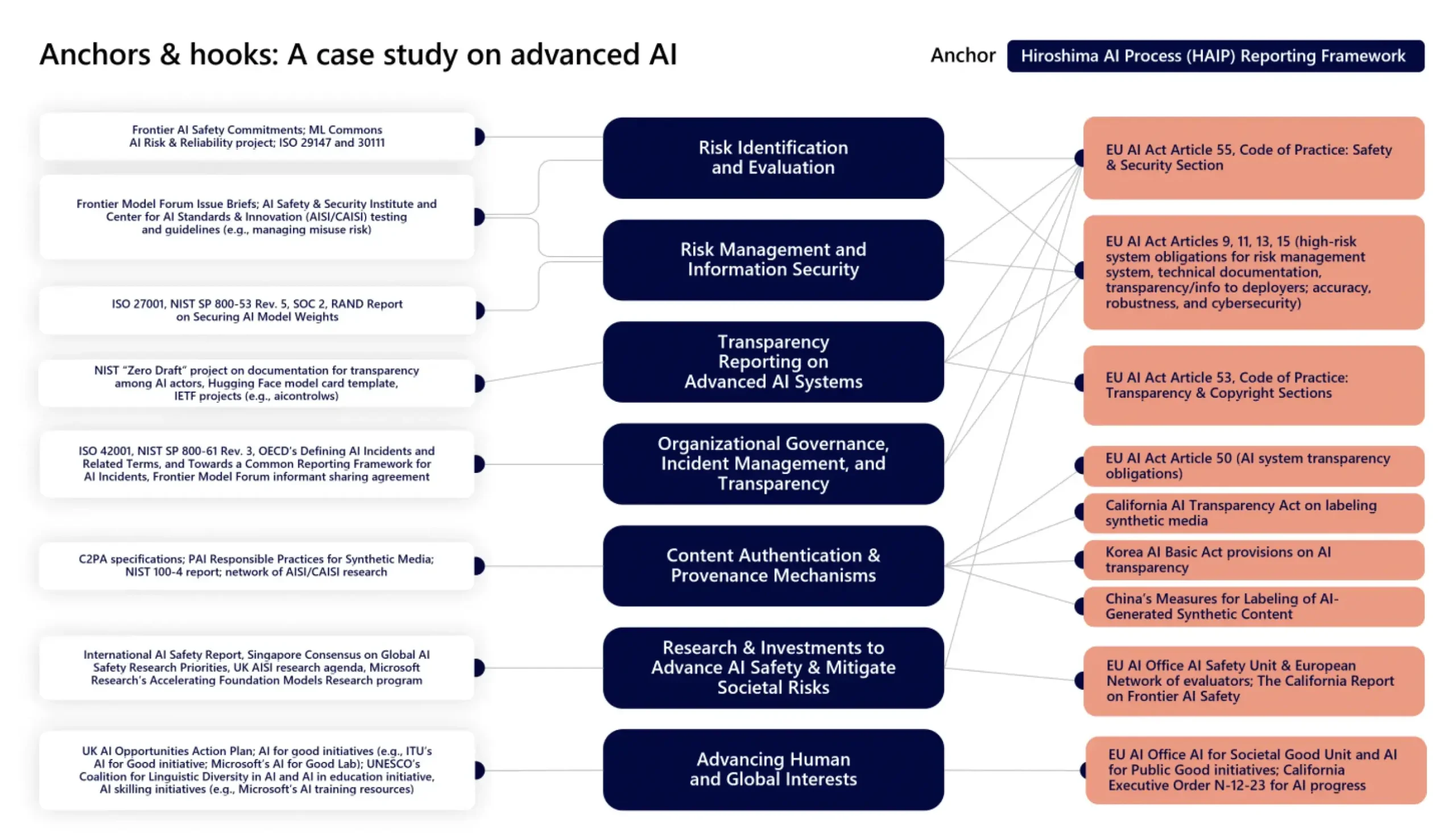

Anchors and Hooks: Connecting the Dots across Who Governs What and How

An Anchors and Hooks framework can be used to support AI governance coherence by studying clusters or gaps on the Map. It can show how instruments interrelate (or not) by drawing connections between those already positioned on the Map—or how gaps might be addressed by linking to activity in other focus areas or other layers of the Stack.

- An Anchor is an instrument that provides foundational context for governance efforts.

- A Hook is an instrument that extends from an Anchor into other areas of the AI Governance Map, whether across layers of the Stack or across focus areas.

An Anchors and Hooks framework recognizes that, in a complex and evolving landscape, coherence can be achieved in multiple ways. Some governance efforts build upward from technical standards; others move top-down from more abstract instruments, as the two different frameworks in the Zero Draft Paper demonstrate. Different actors may treat different instruments as Anchors or Hooks within an interconnected system.

The framework is particularly useful for navigating risks of fragmentation. By tracing how instruments are linked, stakeholders can coordinate more effectively and identify opportunities to build on existing work. In the example below, which uses the Hiroshima AI Process (HAIP) Reporting Framework as an Anchor, stakeholders can see where voluntary efforts dig into complementary areas and where rules can align and cohere with best practice.
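The linking logic can likewise be sketched as a directed graph in which each edge points from an instrument to a Hook that builds on it. This is an illustrative Python sketch only: the instrument names, their Map positions, and the links are hypothetical, and breadth-first traversal is simply one convenient way to trace connections, not a method specified in the Zero Draft Paper.

```python
from collections import deque

# Hypothetical instruments and their (layer, focus area) cells on the Map.
POSITIONS = {
    "Anchor framework": ("middle", "transparency"),
    "Hook standard": ("bottom", "transparency"),
    "Hook law": ("middle", "accountability"),
    "Hook guidance": ("bottom", "accountability"),
}

# Hypothetical directed links: instrument -> instruments that build on it as Hooks.
HOOKS = {
    "Anchor framework": ["Hook standard", "Hook law"],
    "Hook law": ["Hook guidance"],
}


def trace_from_anchor(anchor, hooks):
    """Breadth-first walk from an Anchor; returns every linked instrument in the order reached."""
    seen = {anchor}
    order = [anchor]
    queue = deque([anchor])
    while queue:
        current = queue.popleft()
        for linked in hooks.get(current, []):
            if linked not in seen:  # avoid revisiting instruments (handles cycles)
                seen.add(linked)
                order.append(linked)
                queue.append(linked)
    return order


def cells_reached(anchor, hooks, positions):
    """Map cells connected to the Anchor through chains of Hooks."""
    return [positions[name] for name in trace_from_anchor(anchor, hooks)]


print(trace_from_anchor("Anchor framework", HOOKS))
print(cells_reached("Anchor framework", HOOKS, POSITIONS))
```

Starting the trace from "Hook law" instead returns only ["Hook law", "Hook guidance"], which mirrors the point that different actors may treat different instruments as Anchors and so see different portions of the same interconnected system.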

Provide Us with Feedback to Strengthen AI Governance Foundations

Together, the Stack, Map, and Anchors and Hooks frameworks are designed to support a shared understanding of the AI governance landscape and to provide a flexible foundation for more coherent, coordinated policymaking. This document presents a V0 draft, a preliminary version of the tools, which we intend to refine collaboratively with partners into a V1 release.

Achieving coherence requires collective effort. As part of the “who” of governance (those who design, implement, and are affected by governance), we must build effective tools together. Multistakeholder engagement that brings together policymakers, civil society, industry, and academia builds trust and consensus when it is done well: early, consistently, and meaningfully.

We are therefore:

- Inviting feedback from a broader community on how well these tools reflect the challenges and opportunities that stakeholders encounter, and on how they might be improved to better serve shared goals. Please use this form to submit feedback.

- Convening a multistakeholder workshop, ahead of the India AI Impact Summit, for Partners within the PAI Community, collaborators of Microsoft and Brookings, policymakers, and specialized experts. Because coherence is more likely to succeed, and to stick, when it reflects broad-based input and deliberation, this effort will bring together a group of interdisciplinary stakeholders.