Co-Creating the Future of Responsible AI: Reflections from the All Partners Meeting

Over 200 Gather for 3rd Annual PAI All Partners Meeting

Critical to the Partnership on AI’s (PAI) mission is our multistakeholder approach to creating AI for the benefit of people and society.

This approach was brought to life when PAI’s member community converged in London for the third annual All Partners Meeting (APM) at the end of September. Over the course of two days, more than 200 experts from academia, civil society, and global industry contributed their diverse perspectives to advance the Partnership’s mission of responsible AI.

- PAI’s Leadership Team shared how the organization’s values infuse their work, influence the strategic growth of the Partnership, and orient the organization’s research and policy agendas.

- Presenters and participants jointly examined how the development and deployment of AI technology impacts people in multiple contexts, including the workforce, the media, public spaces, and criminal justice.

- Workshops, panels, and discussions addressed the complex issues surrounding fairness, transparency and explainability in AI systems, as well as the challenges of identifying, prescribing, and operationalizing best practices.

The event highlighted how the broad range of knowledge, experience, and perspectives provided by PAI’s cross-disciplinary and cross-cultural community fuels the insights that enable us to co-create a mutually beneficial future. It also affirmed our commitment to generating learnings and guidelines on responsible AI by bridging the gap between those affected by technologies and those building them.

Three Key Themes

Fireside Chat – The Role of Human and Civil Rights in PAI’s Work

The priorities and tone for the event were set early on in a Fireside Chat moderated by Madeleine Clare Elish (Data & Society), in which Angela Glover Blackwell (PolicyLink) and Dinah PoKempner (Human Rights Watch) discussed the role of civil and human rights in AI work. In a powerful example, Angela Glover Blackwell described the advantages of the curb cut effect, in which policies designed to address the needs of one vulnerable group end up benefiting society as a whole.

Their conversation introduced and touched on three key themes from the event:

- Proximity To The Problem – the need to understand AI in context, and to bridge the gap between those affected by technology and those building it.

- Negotiating Productive Disagreements – a diverse range of viewpoints and the ability to navigate productive disagreements are necessary to produce responsible AI.

- Envisioning a Positive Future – the goal of going beyond mitigating harm to developing a positive and inclusive vision of the world we want to co-create.

Proximity to the Problem

Proximity to those impacted by AI technologies, and the ability to hear their perspectives, is essential to coming up with effective practices in AI development, deployment and governance. As Lisa Dyer, PAI director of policy, explained, “knowing and appreciating the context in which AI operates is vital to shaping thoughtful and informed policies.”

PAI has several projects which examine the impact of AI technologies from the perspective of “experiential experts,” i.e. those most likely to be impacted by their use, such as our work in ABOUT ML, our Criminal Justice Report, our Visa Policy Paper, and the Worker Well-Being convening. PAI’s Criminal Justice Report, for instance, provides a set of requirements and recommendations for jurisdictions to consider when using algorithmic risk assessment tools in criminal justice settings.

Panel – Algorithmic Decision Making in Criminal Justice

This kind of algorithmic decision making is part of a broader conversation on using algorithms for ranking and scoring of human beings, which was explored in a working session, as well as in a panel moderated by Peter Eckersley, PAI director of research, in conversation with Ryan Gerety (Independent Researcher), William Isaac (DeepMind), Logan Koepke (Upturn), and Alice Xiang (PAI).

Negotiating Productive Disagreements in High Impact Contexts

An essential aspect of PAI’s mission is bringing together views from a range of Partner organizations – technology companies, as well as academics, civil society organizations, policymakers, think tanks, and others. A series of panels, introduced and moderated by Rumman Chowdhury (Accenture), was designed to showcase the types of “productive disagreements” that emerge when navigating AI topics with the potential for high impact benefits as well as harms.

Productive Disagreements Session – Panel on Worker Wellbeing

The first of these sessions featured Pavel Abdur-Rahman (IBM), Christina Colclough (UNI Global Union), and Hiroaki Kitano (Sony Computer Science Laboratories), discussing perspectives from industry as well as from unions on productivity, wellbeing, digital transformation, and the implications of an aging society. This focus on worker well-being is part of PAI’s explorations of AI, Labor and the Economy, and is another example of our efforts to better understand the impacts of AI and the perspectives of those affected.

Productive Disagreements Session – Panel on Responsible Publication Norms

The second session in this series explored the complex decisions surrounding responsible publication norms, and the tensions between openness of information and security. In this panel, Anders Sandberg (Future of Humanity Institute) and Andrew Critch (Center for Human-Compatible AI at UC Berkeley) discussed approaches to maximizing the many benefits of open scientific and technological research, while minimizing the risks of malicious use and harmful consequences of AI technologies.

Fireside Chat on Sensor Technologies

These socio-technical issues were also echoed in a conversation between Jennifer Lynch (EFF) and Lisa Dyer (PAI) on the implications of sensor technologies in public spaces, and the different narratives and possibilities around their use (smart cities vs surveillance state, for instance).

Productive Disagreements among Multiple Stakeholders

In order to produce guidelines or best practices for responsible AI, participants must collaboratively work through disagreements and diverse perspectives to find shared positions. This process is a key feature of PAI’s multistakeholder efforts, and was put into practice at working sessions throughout the event. These working sessions enabled partners to share expertise on benchmarks and best practices for fairness, ethics, and social good in Machine Learning and algorithmic decision making, as well as ways to best document and operationalize these insights. They also explored opportunities for leveraging AI for social good more broadly, and focused on current lightning rod issues such as Facial Recognition and Media Integrity.

Panel on Enhancing Transparency in Machine Learning Through Documentation

A recent example of this work is PAI’s project on enhancing transparency and accountability in Machine Learning through documentation, which was the subject of a working session, as well as a panel moderated by Eric Sears (MacArthur Foundation), featuring Hanna Wallach (Microsoft Research), Meg Mitchell (Google), Lassana Magassa (University of Washington Tech Policy Lab), Deb Raji (PAI), and Francesca Rossi (IBM).

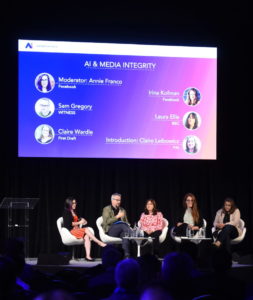

Panel on AI and Media Integrity

The impact of AI technologies on media and public discourse is another area where the cooperation and coordination of multiple stakeholders is required. This topic was addressed in a panel on AI and Media Integrity, moderated by Annie Franco (Facebook), with Laura Ellis (BBC), Claire Wardle (First Draft), Sam Gregory (WITNESS) and Irina Kofman (Facebook). PAI’s work in this area involves facilitating collaboration between newsrooms, media organizations, technology companies, civil society organizations, and academics to address emerging challenges, including convening a Steering Committee on AI and Media Integrity, and supporting a “DeepFakes Detection Challenge,” which will launch in 2020.

Proactively Envision a Positive Future

Terah Lyons (PAI) in discussion with Demis Hassabis (DeepMind)

The range of discussions at APM highlighted how considerations of ethics and responsibility in AI can reveal broader systemic problems and structures – and provide an opportunity to address them. An example of such an effort is PAI’s new research fellowship on diversity and inclusion in AI, supported by DeepMind, and announced at the meeting by DeepMind co-founder and CEO Demis Hassabis.

The event’s workshops and onstage conversations converged on a community call to action, what Julia Rhodes Davis, PAI director of partnerships, referred to as “moral imagination” – the need to be proactive, to move beyond avoiding harm towards claiming both agency and responsibility for the future we all want to live in. “I’m incredibly optimistic about what this community has already accomplished,” concluded PAI Executive Director Terah Lyons, “while also realizing that we have a long way to go to be truly successful. This work is, at its core, a forever project.”

Click here to view photos from the 2019 All Partners Meeting.