From Principles to Practices

Lessons Learned from Applying PAI’s Synthetic Media Framework to 11 Use Cases

![Case studies analysis feature image](https://partnershiponai.org/wp-content/uploads/2024/11/case-studies-analysis-feature.png)

2023 was the year the world woke up to generative AI, and 2024 is the year policymakers will respond more firmly. In the past year, Taylor Swift fell victim to non-consensual deepfake pornography and to a misleading political narrative. A global financial services firm lost $25 million to a deepfake scam. And politicians around the world have seen their likenesses used to mislead in the lead-up to elections.

In the U.S., on the heels of a White House Executive Order, NIST will be “identifying the existing standards, tools, methods, and practices… for authenticating content and tracking its provenance, [and] labeling synthetic content.” This policy momentum is unfolding alongside the real-world creation and distribution of synthetic media. Social media platforms, news organizations, dating apps, courts, image generation companies, and others are already navigating a world of AI-generated visuals and sounds that are changing hearts and minds, while policymakers try to catch up.

How, then, can AI governance capture the complexity of the synthetic media landscape? How can it attend to synthetic media’s myriad uses, ranging from storytelling and privacy preservation to deception, fraud, and defamation, while taking into account the many stakeholders involved in its development, creation, and distribution? And what might it mean to govern synthetic media in a manner that upholds the truth while bolstering freedom of expression? To spur innovation while reducing harm?

What follows is the first known collection of diverse examples of synthetic media governance being implemented in response to these questions, specifically through PAI’s Responsible Practices for Synthetic Media. Here, we present a case bank of real-world examples that help operationalize the Framework, highlighting areas where synthetic media governance can be applied, augmented, expanded, and refined in practice.

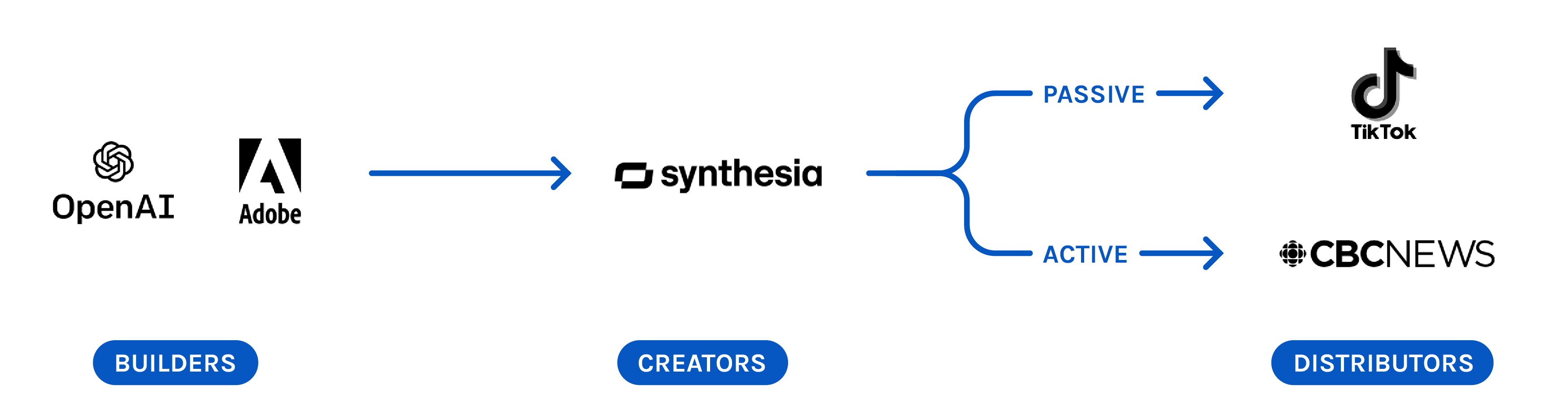

These eleven stakeholders are a seemingly eclectic group; they vary along many axes relevant to synthetic media governance. But they are all integral members of a synthetic media ecosystem that requires a blend of technical and humanistic might to benefit society. As Synthesia rightly notes in its case, “No single stakeholder can enact system-level change without public-private collaboration.”

Some of those featured are Builders of technology for synthetic media, while others are Creators or Distributors. Civil society organizations do not typically build, create, or distribute synthetic media (though they may), but they are included in the case process because they are key actors in the ecosystem surrounding digital media and online information and must have a central role in developing and implementing AI governance.

Read together, the cases emphasize distinct elements of AI policymaking and seven emergent best practices that we explore below. They exemplify key themes that support transparency, safety, expression, and digital dignity online: consent, disclosure, and the differentiation between harmful and creative use cases.

The cases not only provide greater transparency on institutional practices and decisions related to synthetic media, but also help the field refine policies and practices for responsible synthetic media, including emergent mitigations. Secondarily, the cases may support AI policymaking overall, providing broader insight about how collaborative governance can be applied across institutions.

The cases also accentuate several themes we put forth when we launched the Framework in 2023, including:

- Disparate institutions building, creating, and distributing synthetic media can share values, despite their differences and the need for distinct practices for enacting those values.

- Governing a field as fast-paced and dynamic as synthetic media requires adaptability and flexibility.

- Voluntary commitments should be a complement to, rather than a substitute for, government regulation.

Here, we offer emergent best practices from across the cases, followed by a brief analysis of the goals of the transparency case development, what PAI learned throughout the process, and how the cases will inform future policy efforts and multistakeholder work on synthetic media governance.
