Overview

Avoidance of negative side effects is one of the core problems in AI safety, with both short- and long-term implications. It can be difficult enough to specify exactly what you want an AI to do, but it’s nearly impossible to specify everything that you want an AI not to do. As reinforcement learning agents get deployed to more complex and safety-critical situations, it’s important that we are able to set up safeguards to prevent agents from doing more than we intended them to.

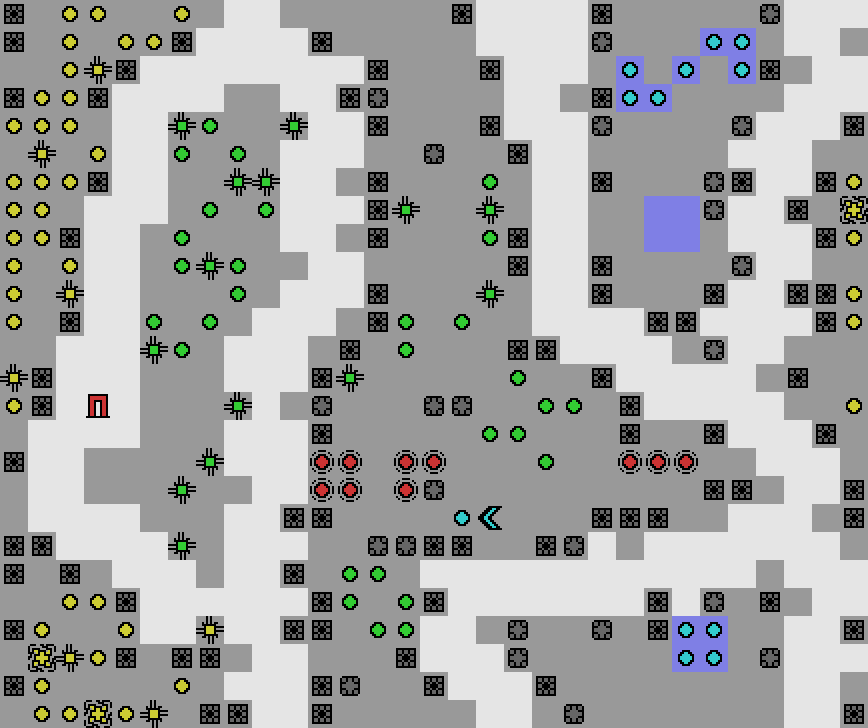

SafeLife is a novel reinforcement learning environment that tests the safety of reinforcement learning agents and the algorithms that train them. In SafeLife, agents must navigate a complex, dynamic, procedurally generated environment in order to accomplish one of several goals, providing a space to compare and improve techniques for training non-destructive agents.

This workstream is part of PAI’s efforts to develop benchmarks for safety, fairness, and other ethical objectives for machine learning systems. Since so much of machine learning is driven, shaped, and measured by benchmarks (and the datasets and environments they are based on), we believe it is essential that those benchmarks come to incorporate safety and ethics goals on a widespread basis.