Implementing Responsible Data Enrichment Practices at an AI Developer: The Example of DeepMind

Executive Summary

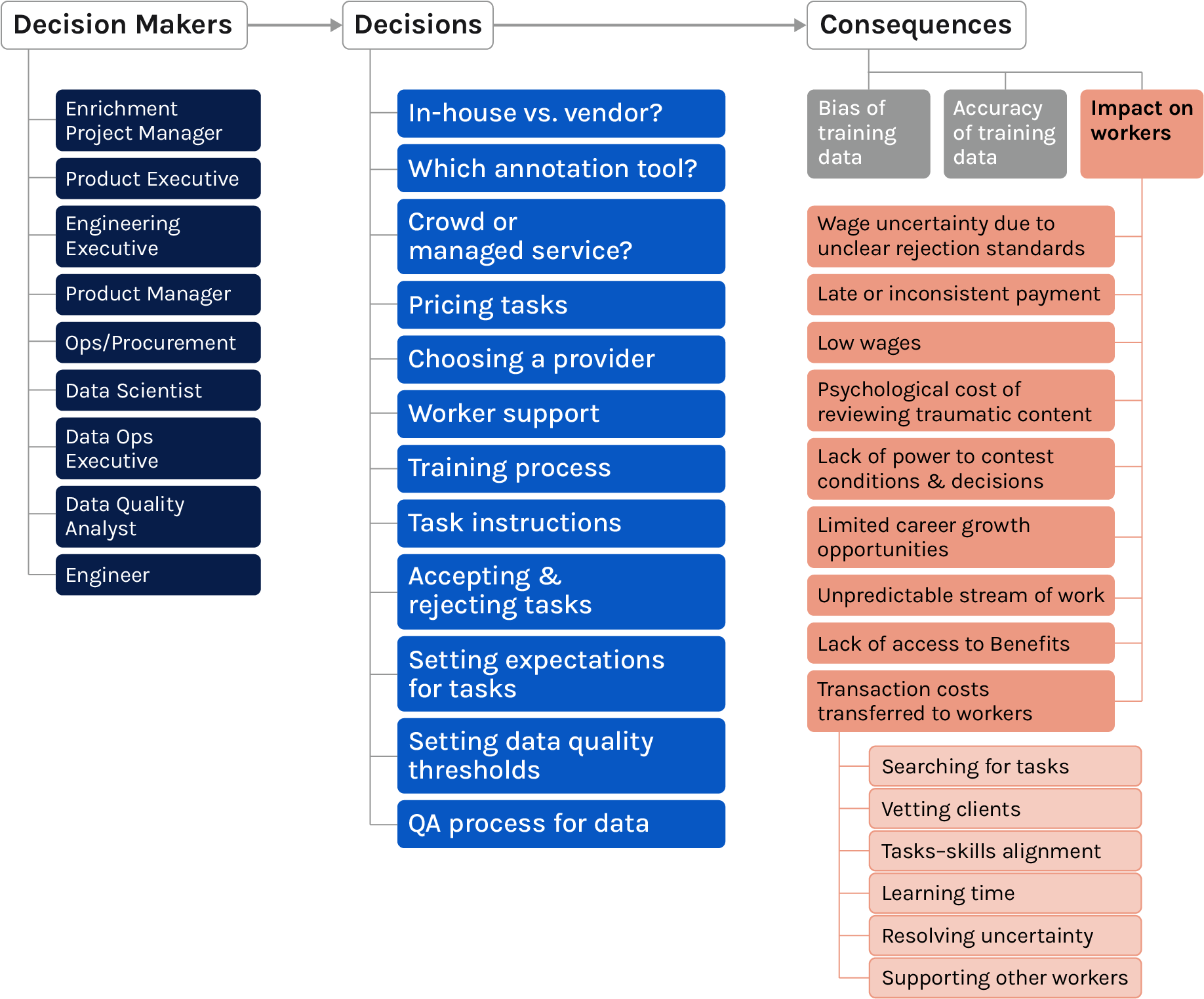

As demand for AI services grows, so, too, does the need for the enriched data used to train and validate machine learning (ML) models. While these datasets can only be prepared by humans, the data enrichment workers who do so (performing tasks like data annotation, data cleaning, and human review of algorithmic outputs) are an often-overlooked part of the development lifecycle, frequently working in poor conditions continents away from AI-developing companies and their customers.

Last year, the Partnership on AI (PAI) published “Responsible Sourcing of Data Enrichment Services,” a white paper exploring how the choices made by AI practitioners could improve the working conditions of these data enrichment professionals. This case study documents an effort to put that paper’s recommendations into practice at one AI developer: DeepMind, a PAI Partner.

In addition to creating guidance for responsible AI development and deployment, PAI’s Theory of Change includes collaborating with Partners and others to implement our recommendations in practice. From these collaborations, PAI collects findings which help us further develop our curriculum of responsible AI resources. This case study serves as one such resource, offering a detailed account of DeepMind’s process and learnings for other organizations interested in improving their data enrichment sourcing practices.

After assessing DeepMind’s existing practices and identifying what was needed to consistently source enriched data responsibly, PAI and DeepMind worked together to prototype the necessary policies and resources. The Responsible Data Enrichment Implementation Team (referred to in this case study as “the implementation team”), which consisted of PAI and members of DeepMind’s Responsible Development and Innovation team, then collected multiple rounds of feedback, testing the resulting outputs and changes with smaller teams before they were rolled out organization-wide.

Versions of these resources have been added to PAI’s responsible data enrichment sourcing library and are now available for any organization that wishes to improve its data enrichment sourcing practices.

Ultimately, DeepMind’s multidisciplinary teams developing AI research, including applied AI researchers (or “researchers” for the purposes of this case study, though this term might be defined differently elsewhere), said that these new processes felt efficient and helped them think more deeply about the impact of their work on data enrichment workers. They also expressed gratitude for centralized guidance that had been developed through a rigorous process, sparing them the burden of individually figuring out how to set up data enrichment projects.

Data Enrichment

Data enrichment is the curation of data, requiring human judgment and intelligence, for the purposes of machine learning model development. This can include data preparation, cleaning, labeling, and human review of algorithmic outputs, sometimes performed in real time.

Examples of data enrichment work:

Data preparation, annotation, cleaning, and validation:

Intent recognition, Sentiment tagging, Image labeling

Human review (sometimes referred to as “human in the loop”):

Content moderation, Validating low confidence algorithmic predictions, Speech-to-text error correction

While organizations hoping to adopt these resources may want to engage with their own teams in a similar way to make sure their unique use cases are accounted for, we hope these tested resources will provide a better starting point for incorporating responsible data enrichment practices into their workflows. Furthermore, to identify where the implemented changes fall short of ideal, we plan to continue developing this work through engagement and convenings. To stay informed, sign up for updates on PAI’s Responsible Sourcing Across the Data Supply Line Workstream page.

This case study details the process by which DeepMind adopted responsible data enrichment sourcing recommendations as organization-wide practice, how challenges that arose during this process were addressed, and the impact on the organization of adopting these recommendations. By sharing this account of how DeepMind did it and why they chose to invest time to do so, we intend to inspire other organizations developing AI to undertake similar efforts. It is our hope that this case study and these resources will empower champions within AI organizations to create positive change.