AI and Elections: Initial Insights from PAI’s Community of Practice

![AI and Elections](https://partnershiponai.org/wp-content/uploads/2024/05/elections-blog-1800x832-1.gif)

With over 70 elections happening globally in 2024, including the high-stakes U.S. presidential election, many are concerned about the impact of synthetic media, and of AI tools more broadly, on democratic processes. Synthetic media is visual, auditory, or multimodal content that has been generated or modified, most commonly by artificial intelligence. Forms of manipulation that do not rely on AI must also be considered in the context of elections.

We have already seen elections from India to Europe affected by the development and spread of sophisticated synthetic media, with political officials' likenesses used to spread unsanctioned messages. In January, voters in New Hampshire received a robocall that used an AI-generated imitation of President Joe Biden's voice, urging them not to vote in the primary election. Our team recently examined the use of deepfake audio in three global election contexts and found that generative AI tools are already playing a role in the 2024 global election cycle. Deepfake content such as altered images, videos, or audio clips, like the Biden robocall, can spread widely and quickly, affecting elections by disrupting voting procedures, shaping candidate narratives, and swaying voter sentiment.

Until recently, access to and use of deepfake and generative AI tools were largely limited to people with technical know-how. Democratized access to tools that can produce and manipulate media, combined with their growing realism and sophistication, creates a need for further guidance and guardrails for those who build, distribute, and create synthetic content. If responsible synthetic media practices, such as disclosure, are not implemented alongside safety recommendations for open source model builders, synthetic media may lead to real-world harm, including the manipulation of democratic and political processes.

Since February 2024, PAI has held monthly Community of Practice (COP) meetings to explore how different stakeholders are addressing the challenges posed by the use of AI tools in elections. This multistakeholder approach is what allows PAI to bring together communities that can become catalysts for change. The COP convenings are in-depth conversations with a curated group of stakeholders directly involved in the field of AI and elections. The meetings give members of the PAI Community of Practice an avenue to present their ongoing work on AI and elections, receive feedback from their peers, and discuss the difficult questions and tradeoffs involved in deploying this technology.

Meedan’s Mission to Combat Misinformation

Our first meeting in the series began with Meedan, a technology nonprofit dedicated to strengthening journalism, digital literacy, and the accessibility of trusted information, online and offline, around the world. Meedan shared feedback from its global network of civil society organizations about threats to accessing clear, factual information, which effective civic engagement and a healthy democracy depend on; these threats include the accelerating use of AI in mis- and disinformation. Of course, synthetic content is only one mechanism for spreading falsehoods, and Meedan explored in depth the many ways targeted or otherwise harmful narratives and ideas take shape and spread.

Based on feedback from journalists, civil society organizations, and audiences worldwide, the Meedan team confirmed how difficult it is to address inaccurate information shared across search, social, and chat platforms. Closed messaging platforms in particular cannot moderate content directly, which makes them more vulnerable to the sharing of inaccurate or harmful information. Text messaging, for example, is largely unregulated, creating avenues to reach voters and audiences without any way to verify or check the information being shared. Meedan believes that all audiences should have on-demand access to relevant, verifiable information on the channels of their choice, about elections and beyond. That is why Meedan made fact-checks available on closed messaging apps, providing real-time access to information vetted by journalists. Any guidance for dealing with misleading content must account for the different mediums through which people access information, not just the largest technology platforms and social media websites.

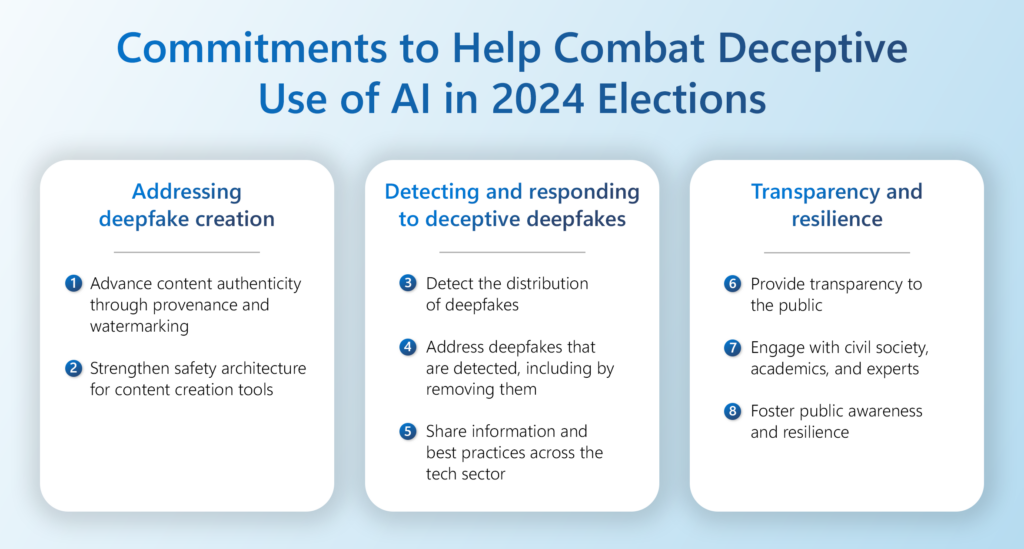

Microsoft’s Commitment to Combating Deepfakes

In the second meeting of the series, Microsoft highlighted its commitment to combating deepfakes and the spread of misinformation during election cycles. Microsoft described its contribution to the Tech Accord to Combat Deceptive Use of AI in 2024 Elections, a coalition of 23 signatory companies, ranging from synthetic media developers to social media platforms, that aims to establish shared, robust approaches to content authenticity and disclosure. Doing so would help candidates authenticate the content they create. Signatories also commit to detecting and combating content that spreads misinformation or impersonates political actors. In addition to supporting indirect disclosure methods such as watermarking and the C2PA technical standards, which PAI has advocated for, Microsoft is working on direct disclosures: clear indicators that tell end users when content is manipulated or deceptive. Notably, many of the themes in the Tech Accord map onto existing norms set out in PAI's Responsible Practices for Synthetic Media: A Framework for Collective Action. Future work must deepen our understanding of how audiences and the public make sense of an increasingly AI-augmented media environment. The FBI also plays a critical role, both in sharing information across technology companies and government agencies and in detecting deceptive and fake content related to elections and foreign interference. This, in turn, creates impetus for further regulation in this area, which is critical for this space.

Image via Microsoft, from its blog post on the new Tech Accord

The Brennan Center’s Call for Regulation

The third meeting of the series was held with the Brennan Center for Justice, a nonprofit law and public policy institute at New York University School of Law. The Brennan Center focused on U.S. elections and emphasized how regulation could address the threat of deepfakes in the political arena, describing approaches such as mandated labels, indirect disclosures (such as watermarks and signed metadata), and even default bans on certain uses of deepfakes. The conversation centered on protecting candidates and election workers from online harassment while improving the quality of political discourse. Although regulatory action in the U.S. is happening predominantly at the state level, bipartisan interest in regulating the electoral use of deepfakes is an encouraging step in the right direction. Regulation must also address platforms and developers, which play key roles in distributing misleading and deepfake content.

Consistent Themes Across the Board

Throughout the different conversations in the series, common threads have emerged that warrant further exploration.

- While AI accelerates and deepens existing election threats, we must remember that AI is not needed to cast doubt on narratives and manipulate election processes. For more from PAI on this point, see our comment to the Federal Election Commission.

- Closed messaging platforms present particular risks when countering election misinformation and should be treated as distinct surfaces when crafting AI policies.

- Regulation should consider the need to preserve free expression while mitigating falsehood and harm. Supporting indirect disclosures and labels, rather than bans, is a meaningful route for balancing these values.

- In the United States, much of the synthetic media regulation is taking place at the state level, though there is increasing federal momentum around synthetic content.

- Broader public education on synthetic content is required for any artifact-level intervention, such as labels, to be effective. This theme also emerged in PAI's recent analysis of Case Studies for Synthetic Media.

- The 2024 elections require multistakeholder collaboration to address the challenges AI poses to democracy.

What’s Next for the COP

With three more meetings on the horizon, our focus will broaden to voices from news organizations covering AI in elections, tech platforms, and civil society organizations. These meetings aim to showcase proactive measures various stakeholders are undertaking to inform the public about the use of AI during elections and mitigate potential harms, equipping the broader ecosystem with the knowledge and methods for safeguarding elections. As our COP meetings continue, we remain committed to fostering dialogue, collaboration, and action to ensure the integrity and fairness of electoral processes in an increasingly AI-driven world.

To stay up to date on how PAI is shaping the future of AI, sign up for our newsletter.